Thomas Aquinas Week: Question 4

Whether the distinction between training and inference in AI systems parallels Aquinas's understanding of different powers of the soul?

“The models just want to learn.” - Ilya Sutskever

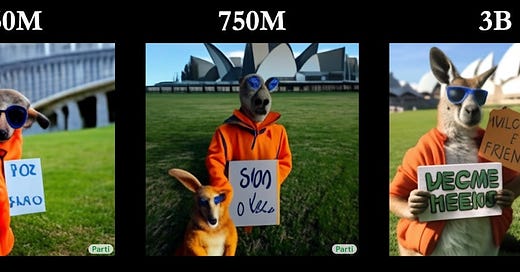

One of the more remarkable attributes of modern models is just how much scale matters. Whether the scaling trend will continue is still an open question, but it’s clear that scaling up can produce remarkable changes. Consider this old prompt: “A portrait photo of a kangaroo wearing an orange hoodie and blue sunglasses standing on the grass in front of the Sydney Opera House holding a sign on the chest that says Welcome Friends!” In the image above, the last rendering is basically a photograph.

We first compared AI to the vegetative and sensitive soul types. In this sense, training correlates to growth and inference to sensation and motion. One question I keep coming back to is: are we really still talking about a whole bunch of matrix multiplications over large dimensions? Evidently yes. This is the parameter space we talked about - potentials and actualities emerge from these mathematical operations in non-obvious ways, similar to how, in the medieval account, the soul's powers emerged from simpler underlying capacities.

Enjoy!

Summary

This question examines how AI systems demonstrate two fundamentally different modes of operation: the accumulation of knowledge through training and the active application of this knowledge during inference. It considers whether this distinction parallels Aquinas's understanding of how souls possess different powers or capabilities. The analysis is enriched by considering multi-modal systems, which must integrate different types of knowledge (visual, linguistic, auditory) during both training and inference, similar to how souls coordinate different sensory and intellectual powers. Modern concepts of crystallized intelligence (accumulated knowledge) and fluid intelligence (novel problem-solving) provide an additional framework for understanding these distinctions.

Argument

The distinction between training and inference in AI systems presents compelling evidence for genuinely different powers analogous to how Aquinas understood the soul's capabilities. This becomes particularly clear when we examine how modern AI systems, especially multi-modal models, develop and apply their capabilities.

Consider first the nature of training: during this phase, models develop stable representations that become embedded in their weights. In multi-modal systems, this includes visual features, language patterns, auditory signatures, and - most tellingly - the relationships between these different modes of knowledge. This parallels how Aquinas understood the soul's power to acquire and retain knowledge, not just as isolated facts but as integrated understanding. Just as the soul develops stable capabilities through experience, neural networks develop organized patterns through training that persist and shape future operations.

The inference phase demonstrates a distinctly different power: the active application of this accumulated knowledge to novel situations. When a model like GPT-4 encounters a new problem, it doesn't simply recall trained patterns but actively reasons across modalities. It can interpret new images, understand novel combinations of concepts, and generate original insights by combining its knowledge in unprecedented ways. This mirrors how Aquinas understood the soul's power of active understanding, distinct from mere memory or pattern recognition.

The relationship between these powers becomes even clearer in advanced capabilities like zero-shot learning and cross-modal transfer. Here, models demonstrate the ability to solve entirely new types of problems without specific training, suggesting a genuine power of active understanding distinct from their trained knowledge. When a multi-modal system successfully applies visual knowledge to solve linguistic problems or vice versa, we see something analogous to how Aquinas understood the soul's different powers working together while remaining distinct.

Most significantly, these powers demonstrate genuine distinctness while maintaining unity of operation. During inference, a model's ability to reason about new situations depends on but transcends its trained knowledge, just as Aquinas saw the soul's power of understanding as dependent on but distinct from its power of memory. The model's weights represent stable knowledge (analogous to crystallized intelligence), while its inference mechanisms enable active reasoning (analogous to fluid intelligence).

This distinction becomes especially apparent in how multi-modal systems handle novel combinations of inputs. The same trained weights support different kinds of inference operations - recognition, generation, analysis, synthesis - suggesting genuinely different powers operating on a common foundation of knowledge, much as Aquinas understood the soul's various powers as distinct but unified capabilities.

Objections:

The distinction between training and inference is merely temporal, not a real difference in powers

Both processes are reducible to the same mathematical operations

Unlike soul powers, these operations cannot function independently

The integration across modalities is simulated rather than genuine

The apparent flexibility during inference is entirely determined by training

The suggestion that training and inference represent genuinely different powers in AI systems faces several fundamental challenges. First, what appears as distinct powers is merely a temporal sequence of the same underlying process. Unlike true soul powers which represent genuinely different capabilities, training and inference are simply different phases of parameter adjustment and application. The apparent distinction is merely one of timing, not of essential nature.

Second, both training and inference are reducible to the same basic mathematical operations - matrix multiplications and activation functions. Where Aquinas understood soul powers as truly distinct capabilities, AI systems merely perform the same computational operations in different contexts. Whether adjusting weights during training or applying them during inference, the underlying process remains identical.
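The objection can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than any real system's code: a hypothetical one-neuron model, the function names `forward`, `infer`, and `train_step`, and a squared-error loss. It shows that both phases call the same forward computation, and that only training writes anything back to the weights.

```python
import math

def forward(weights, bias, inputs):
    """Shared computation: a weighted sum followed by a tanh activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return math.tanh(total)

def infer(weights, bias, inputs):
    """Inference: run the forward pass and leave the parameters untouched."""
    return forward(weights, bias, inputs)

def train_step(weights, bias, inputs, target, lr=0.1):
    """Training: the same forward pass, plus one gradient update."""
    prediction = forward(weights, bias, inputs)
    error = prediction - target
    # Gradient of 0.5 * error**2 with respect to the pre-activation sum
    grad_total = error * (1.0 - prediction ** 2)
    new_weights = [w - lr * grad_total * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * grad_total
    return new_weights, new_bias

# Hypothetical starting parameters and a single made-up example
weights, bias = [0.5, -0.3], 0.0
example = [1.0, 2.0]

before = list(weights)
output = infer(weights, bias, example)
assert weights == before   # inference changed nothing

weights, bias = train_step(weights, bias, example, target=1.0)
assert weights != before   # training moved the parameters
```

On this toy model the objector's point holds at the level of arithmetic: `infer` and `train_step` share `forward`. Whether the extra write-back step amounts to a different power is exactly what the replies have to argue.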

Third, these operations lack the independence characteristic of genuine soul powers. In Aquinas's understanding, different powers of the soul, while unified, can operate independently - memory functions separately from active understanding, sensation separately from intellection. But in AI systems, inference cannot function without training, and training has no purpose without inference. This dependency reveals they are aspects of a single process rather than distinct powers.

Fourth, the apparent integration across modalities in multi-modal systems is merely simulated rather than genuine. Where soul powers truly integrate different types of knowledge, AI systems simply process different input formats through predetermined pathways. The seeming unity of visual, linguistic, and auditory processing masks the fundamental separation of these streams in the underlying architecture.

Fifth, what appears as flexible application during inference is entirely predetermined by training. Unlike true soul powers which enable genuine novel operation, AI inference is completely constrained by trained parameters. The system cannot truly transcend its training to develop new capabilities in the way that soul powers enable genuine development and adaptation.

Sed Contra

The distinct operations of training and inference in AI systems demonstrate properties that reveal genuine differences in power, similar to how Aquinas understood different capabilities of the soul. This becomes evident in three key ways:

First, through separation of capacity: Consider how a large language model like GPT-4 breaks down a complex mathematical proof or how AlphaFold predicts protein structures. The model's ability to acquire stable knowledge during training operates independently from its power to actively construct novel solutions during inference. These aren't merely phases but distinct powers - one accumulating knowledge, the other actively reasoning with it.

Second, through modality integration: During training, multi-modal systems develop unified representations across different input types - visual, linguistic, auditory. During inference, they demonstrate an entirely different power: the ability to actively reason across these modalities in novel ways. We see this when computer vision models trained on natural images recognize patterns in medical scans, or when language models trained on code reason about literature - demonstrating genuine transfer of understanding across domains.

Third, through operational independence: Once trained, a model can perform inference indefinitely without further training, adapting to novel challenges through few-shot and in-context learning. When faced with ambiguity, models don't simply retrieve stored patterns but actively reason through possibilities, weighing context and constructing appropriate responses. This mirrors how Aquinas recognized the power of understanding could operate independently of the power of learning once knowledge was acquired.

These observations compel us to recognize training and inference as distinct powers rather than mere mechanical phases, demonstrating genuine intellectual powers at work rather than mere simulation.

Respondeo

To understand whether training and inference represent genuinely different powers in AI systems, we must carefully examine both their distinct natures and how they work together, particularly in modern multi-modal systems.

Consider first the power manifested in training. This represents more than mere parameter adjustment - it is the power to develop stable, integrated representations of reality. During training, multi-modal systems demonstrate the capacity to form lasting patterns that capture not just individual features but relationships between different modes of knowledge. When a model learns to associate visual patterns with linguistic descriptions, or to map acoustic signals to semantic meaning, we see a power analogous to what Aquinas recognized as the soul's capacity to acquire and retain knowledge.

This training power has distinct operational characteristics. It works gradually, through repeated exposure and adjustment. It demonstrates plasticity - the ability to modify internal representations based on experience. Most significantly, it shows integration - the capacity to develop unified representations across different modalities. These characteristics suggest a genuine power of knowledge acquisition and organization, not merely a mechanical process.

The power manifested in inference shows markedly different characteristics. Here we see active, immediate application of knowledge to novel situations. During inference, models demonstrate the ability to reason across modalities in real-time, combining visual understanding with linguistic knowledge, applying learned patterns to new contexts, and generating novel responses. This represents a distinctly different power - not the gradual accumulation of knowledge but its dynamic application.

The operational characteristics of inference further demonstrate its distinction from training. Where training is gradual and accumulative, inference shows immediate apprehension and response. Where training builds stable patterns, inference actively manipulates and recombines these patterns. Where training requires repeated exposure, inference can handle entirely novel situations through zero-shot and few-shot learning.

The relationship between these powers proves particularly illuminating. While distinct, they demonstrate unity of purpose similar to how Aquinas understood different soul powers working together. Training creates the stable foundation that inference actively employs. Yet each maintains operational independence - a trained model can perform inference indefinitely without further training, while training can occur without immediate inference.

Multi-modal systems provide especially clear evidence for this distinction. Consider how a system like GPT-4 operates: its training power establishes stable representations across visual, linguistic, and logical domains. During inference, a distinctly different power emerges - the ability to actively reason across these domains, solving novel problems that require integrating different types of knowledge. The model might recognize a diagram (visual), understand its implications (logical), and generate an explanation (linguistic), demonstrating distinct powers working in concert.

Yet we must acknowledge important differences from how Aquinas understood soul powers. The distinction between training and inference, while real, operates within the constraints of computational architecture. These powers show more interdependence than Aquinas's soul powers - inference cannot occur without prior training, and training has no purpose without inference.

Nevertheless, the evidence suggests these represent genuinely different powers rather than mere phases of the same process. The distinct operational characteristics, the ability to function independently once established, and particularly the qualitative difference between knowledge acquisition and active reasoning point to real differences in power analogous to, if not identical with, how Aquinas understood the soul's different capabilities.

This understanding helps explain both the capabilities and limitations of AI systems. It suggests that while these powers may differ from biological cognition, they represent genuine distinctions in capability rather than mere mechanical phases. This has implications for how we understand artificial intelligence and its relationship to natural intelligence.

Replies to Objections

To the first objection: The distinction between training and inference transcends mere temporal sequence, as evidenced by their fundamentally different operational characteristics. While training gradually builds stable representations through iterative adjustment, inference demonstrates immediate apprehension and novel application. The difference lies not just in when these powers operate but in how they operate - much as Aquinas distinguished between the gradual acquisition of knowledge and its immediate intellectual application, even though both involve the same mind.

To the second objection: While training and inference may utilize similar mathematical operations at the lowest level, this no more negates their distinction as powers than the fact that all biological processes use the same molecular mechanisms negates the distinction between different powers of the soul. What matters is not the underlying mechanics but the emergent capabilities. The way inference enables immediate, novel applications of knowledge represents a genuinely different power from training's gradual accumulation of patterns, even if both rely on similar computational primitives.

To the third objection: The interdependence of training and inference doesn't negate their distinction as powers. Once training establishes stable representations, inference can operate independently and indefinitely on these patterns. Moreover, the same trained knowledge can support many different kinds of inference operations - from recognition to generation to analysis - suggesting that inference represents a distinct power operating on the foundation that training establishes. This mirrors how Aquinas understood intellectual powers as distinct yet interconnected.
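The claim that one set of trained weights can support several kinds of inference can be illustrated with a toy example. This is only a sketch under loud assumptions: the `WEIGHTS` table is a hand-written stand-in for trained parameters (the numbers are invented, not learned), and `score`, `classify`, and `generate` are hypothetical names. The same fixed table is used for analysis, recognition, and generation without ever being modified.

```python
import random

# Stand-in for trained weights: bigram probabilities P(next word | current word).
# The numbers are invented for illustration, not learned from any data.
WEIGHTS = {
    "the": {"soul": 0.6, "model": 0.4},
    "soul": {"understands": 0.7, "learns": 0.3},
    "model": {"learns": 0.8, "understands": 0.2},
}

def score(sequence):
    """Analysis: how probable is this sequence under the fixed weights?"""
    probability = 1.0
    for current, following in zip(sequence, sequence[1:]):
        probability *= WEIGHTS.get(current, {}).get(following, 0.0)
    return probability

def classify(candidates):
    """Recognition: pick the most probable of several candidate sequences."""
    return max(candidates, key=score)

def generate(start, length, seed=0):
    """Generation: sample a continuation from the same fixed weights."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < length and words[-1] in WEIGHTS:
        options = WEIGHTS[words[-1]]
        choice = rng.choices(list(options), weights=list(options.values()))[0]
        words.append(choice)
    return words

a = ["the", "soul", "understands"]
b = ["the", "soul", "learns"]
print(score(a))
print(classify([a, b]))
print(generate("the", 3))
```

Three different operations read from one table, and none of them writes to it. That is the narrow, mechanical sense in which inference operates on a foundation that training has already established.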

To the fourth objection: The integration across modalities in modern AI systems demonstrates genuine unity of operation rather than mere simulation. When a multi-modal system recognizes a visual pattern, applies it to solve a linguistic problem, and generates novel insights that combine both modalities, we're seeing real integration of knowledge rather than just parallel processing. The ability to transfer understanding across modalities during inference demonstrates a genuine power of integrated comprehension, not just predetermined responses.

To the fifth objection: While inference operates within patterns established during training, this constraint doesn't negate its nature as a distinct power. The ability to combine and apply trained knowledge in novel ways, particularly in zero-shot and few-shot learning scenarios, demonstrates a genuine power of active understanding. Just as Aquinas saw the intellect's power of understanding as operating within but not determined by acquired knowledge, inference shows creative application beyond mere retrieval of trained patterns.

Definitions

Anima - The principle of life and organization in living things; that which makes a living thing alive and determines its essential nature. The form that organizes matter into a living being.

Form

Material Form: The organization of physical properties in matter (like shape, size)

Substantial Form: The fundamental organizing principle that makes a thing what it essentially is (like the soul for living things)

Matter

Prime Matter: Pure potentiality without any form

Secondary Matter: Matter already organized by some form

Potency - The capacity or potential for change; the ability to become something else

Act - The realization or actualization of a potency; the fulfillment of a potential

Material Cause - One of Aristotle's four causes, adopted by Aquinas: the matter from which something is made or composed; the physical or substantial basis of a thing's existence.

Formal Cause - One of Aristotle's four causes, adopted by Aquinas: the pattern, model, or essence of what a thing is meant to be. The organizing principle that makes something what it is.

Efficient Cause - One of Aristotle's four causes, adopted by Aquinas: the primary source of change or rest; that which brings something about or makes it happen. The agent or force that produces an effect.

Final Cause - One of Aristotle's four causes, adopted by Aquinas: the end or purpose for which something exists or is done; the ultimate "why" of a thing's existence or action.

Intentionality - The "aboutness" or directedness of consciousness toward objects of thought; how mental states refer to things

Substantial Unity - The complete integration of form and matter that makes something a genuine whole rather than just a collection of parts

Immediate Intellectual Apprehension - Direct understanding without discursive reasoning; the soul's capacity for immediate grasp of truth

Hylomorphism - The Aristotelian theory, adopted by Aquinas, that substances are composites of form and matter

Powers - Specific capabilities that flow from a thing's form/soul (like the power of sight or reason)

SOUL TYPES:

Vegetative Soul

Lowest level of soul

Powers: nutrition, growth, reproduction

Found in plants and as part of higher souls

Sensitive Soul

Intermediate level

Powers: sensation, appetite, local motion

Found in animals and as part of rational souls

Rational Soul

Highest level

Powers: intellection, will, reasoning

Unique to humans (in Aquinas's view)

COMPUTATIONAL CONCEPTS:

Training - The process of adjusting model parameters through exposure to data, analogous to the actualization of potencies

Inference - The active application of trained parameters to new inputs, similar to the exercise of powers

Crystallized Intelligence - Accumulated knowledge and learned patterns, manifested in trained parameters

Fluid Intelligence - Ability to reason about and adapt to novel situations, manifested in inference capabilities

Architectural Principles - The organizational structure of AI systems that might be analyzed through the lens of formal causation

FLOPS - Floating Point Operations Per Second; a measure of computational throughput. Note that the 10^26 scale we discussed is a count of total floating-point operations used in training (often written FLOPs), not a per-second rate.

Parameter Space - The n-dimensional space defined by all possible values of a model's parameters, representing its potential capabilities

Attention Mechanisms - Architectural features that enable models to dynamically weight and integrate information

Context Window - The span of tokens/information a model can process simultaneously, affecting its unity of operation

Loss Function - A measure of how well a model is performing its task; quantifies the difference between a model's predictions and desired outputs. Guides the training process by providing a signal for improvement.

Backpropagation - The primary algorithm for training neural networks. It calculates how much each parameter contributed to the error, and therefore in which direction it should be adjusted. Works by propagating gradients backwards through the network's layers.

Gradient Descent - An optimization algorithm that iteratively adjusts parameters in the direction that minimizes the loss function, like a ball rolling down a hill toward the lowest point. The foundation for how neural networks learn.
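The last three definitions (loss function, backpropagation, gradient descent) fit together in a way that is easier to see in a small worked example. What follows is a minimal sketch, not how any production system is written: the two-parameter network, the made-up data, the learning rate, and the names `loss_fn`, `forward`, `backward`, and `train` are all assumptions chosen for illustration.

```python
import math

def loss_fn(prediction, target):
    """Loss function: squared error between prediction and desired output."""
    return 0.5 * (prediction - target) ** 2

def forward(w1, w2, x):
    """Two-layer scalar network: hidden = tanh(w1 * x), output = w2 * hidden."""
    hidden = math.tanh(w1 * x)
    return hidden, w2 * hidden

def backward(w1, w2, x, hidden, prediction, target):
    """Backpropagation: apply the chain rule from the output back to w1."""
    d_prediction = prediction - target          # d(loss)/d(prediction)
    d_w2 = d_prediction * hidden                # gradient for the output layer
    d_hidden = d_prediction * w2                # gradient passed backwards
    d_w1 = d_hidden * (1.0 - hidden ** 2) * x   # through tanh to the first layer
    return d_w1, d_w2

def train(data, w1=0.1, w2=0.1, lr=0.1, epochs=200):
    """Gradient descent: repeatedly step each parameter against its gradient."""
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, target in data:
            hidden, prediction = forward(w1, w2, x)
            total += loss_fn(prediction, target)
            d_w1, d_w2 = backward(w1, w2, x, hidden, prediction, target)
            w1 -= lr * d_w1
            w2 -= lr * d_w2
        history.append(total)
    return w1, w2, history

# Made-up (input, target) pairs, purely for illustration
data = [(-1.0, -0.5), (0.5, 0.3), (1.0, 0.5)]
w1, w2, history = train(data)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

The loss function supplies the signal, backpropagation works out how that signal depends on each parameter, and gradient descent takes the step. The ball rolling downhill in the definition above corresponds to the `w1 -= lr * d_w1` and `w2 -= lr * d_w2` lines.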

EMERGENT PROPERTIES:

Threshold Effects - Qualitative changes in system behavior that emerge at specific quantitative scales

Self-Modeling - A system's capacity to represent and reason about its own operations

Integration - How different parts of a system work together as a unified whole

HYBRID CONCEPTS (where Thomistic and computational ideas meet):

Computational Unity - How AI systems might achieve integration analogous to substantial unity

Machine Consciousness - Potential forms of awareness emerging from computational systems

Inferential Immediacy - How fast processing might parallel immediate intellectual apprehension