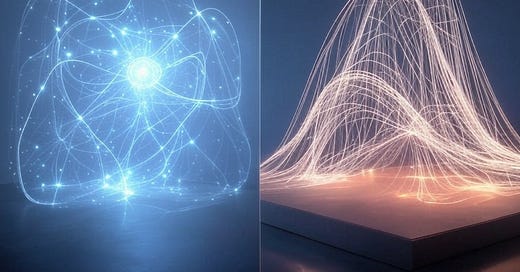

One of the ways we describe chatting with an LLM is as a search through latent space or parameter space. We are looking for the right set of weights and dimensions to answer our prompt, and the LLM's job is to find that set. A model is, quite literally, an enormous, mind-bogglingly large collection of parameters that describe how words and tokens relate to one another in context. Incidentally, this is one reason LLMs are so good at impersonation: when you include “answer as William Shakespeare” in your prompt, you narrow the space and focus the search.

One of the most fascinating ideas about the world is that purely mathematical rules can give rise to the reality we see around us. That is why I find the closing statement of the Respondeo below so striking, especially since modern physics has taught us that reality at the quantum level is underpinned by probabilistic math: “Parameter spaces might represent a novel kind of potential - not quite the same as physical potency, but sharing enough key features to deserve recognition as a genuine example of the movement from possibility to actuality.” Parameter space isn’t totally novel; physical potency might be more probabilistic than we realize.

Enjoy!

Summary

This investigation applies Aquinas's fundamental metaphysical principles of potency and act to understand the nature of neural network parameter spaces. It examines whether the transformation of an untrained network into a trained one represents a genuine movement from potency to act in the Thomistic sense. The analysis considers how the vast possibility space of network parameters might constitute a form of pure potency, and whether the training process represents a true actualization of latent capabilities.

Argument

The parameter space of neural networks presents a compelling analogy to Aquinas's conception of potency and act, particularly in how it represents the transition from possibility to actuality. This analogy becomes especially clear when we examine the nature of an untrained neural network and its journey to a trained state.

Consider an initialized neural network: its parameter space represents a vast field of possibilities, each point in this high-dimensional space corresponding to a potential configuration of the network. This initial state bears a remarkable similarity to Aquinas's concept of prime matter in its pure potentiality. Just as prime matter contains the potential for all possible forms but has no actuality of its own, the initial parameter space contains all the behaviors the network might manifest but has not yet actualized any particular capability.
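To make “each point in this high-dimensional space” concrete, here is a minimal Python sketch assuming a hypothetical fully connected network with 2 inputs, 3 hidden units, and 1 output. Its parameter space has 13 dimensions (9 for the first layer's weights and biases, 4 for the second's), and every sampled point is a different concrete network. The layer sizes, the tanh activation, and the function names are illustrative choices, not taken from any particular model.

```python
import math
import random

# Hypothetical architecture: 2 inputs -> 3 hidden units -> 1 output.
LAYER_SIZES = [2, 3, 1]


def parameter_count(sizes):
    """Number of weights plus biases in a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def random_point(sizes, seed):
    """Sample one point in parameter space, i.e. one concrete configuration."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(parameter_count(sizes))]


def forward(params, x, sizes=LAYER_SIZES):
    """Evaluate the network encoded by a flat parameter vector on input x."""
    idx, activations = 0, list(x)
    n_layers = len(sizes) - 1
    for layer, (n_in, n_out) in enumerate(zip(sizes, sizes[1:])):
        weights = params[idx:idx + n_in * n_out]
        idx += n_in * n_out
        biases = params[idx:idx + n_out]
        idx += n_out
        outputs = []
        for j in range(n_out):
            z = biases[j] + sum(weights[j * n_in + i] * activations[i] for i in range(n_in))
            # tanh on hidden layers, identity on the output layer
            outputs.append(math.tanh(z) if layer < n_layers - 1 else z)
        activations = outputs
    return activations[0]


print(parameter_count(LAYER_SIZES))  # 13
p0, p1 = random_point(LAYER_SIZES, 0), random_point(LAYER_SIZES, 1)
x = [1.0, -1.0]
print(forward(p0, x), forward(p1, x))  # two points, two different behaviors
```

The same architecture hosts every one of these configurations; which one the network "is" depends only on where in the 13-dimensional space its parameters sit.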

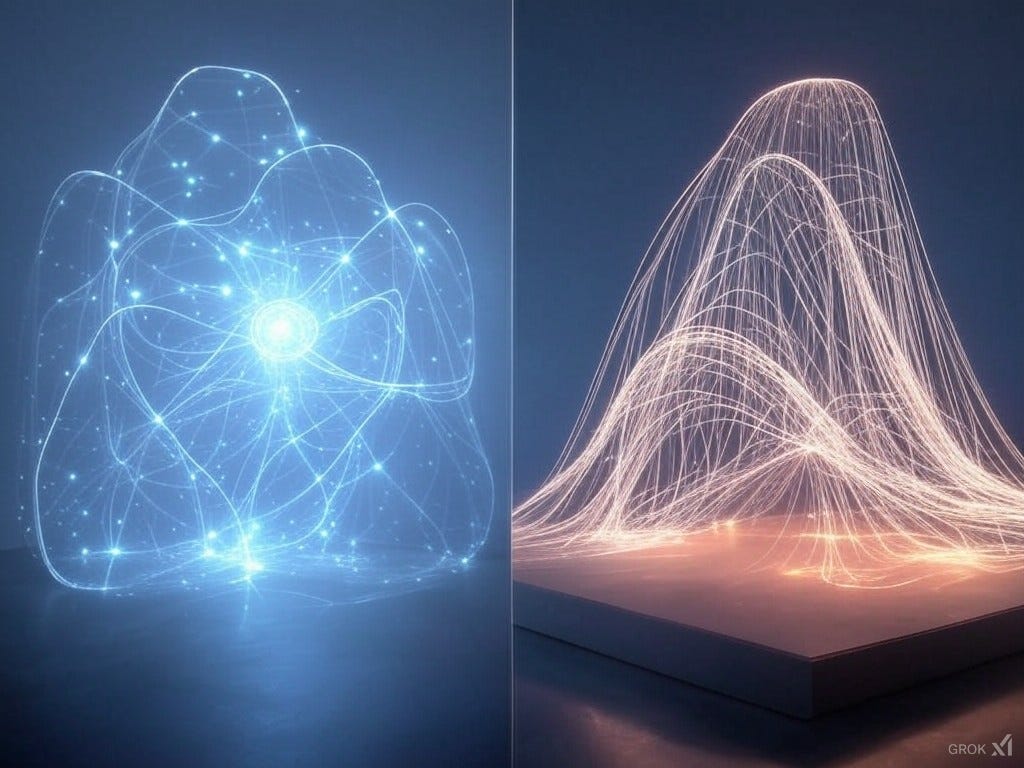

The training process itself represents a profound example of the movement from potency to act. As the network processes training data, its parameters gradually shift through this space, actualizing specific capabilities that were previously only potential. This isn't merely a mechanical process of adjustment but represents genuine actualization - the network moves from a state of pure possibility to one of realized capability.

Furthermore, the parameter space exhibits hierarchical layers of potency and act that parallel Aquinas's understanding of graduated actualization. Lower-level features in early layers represent basic potentialities that, when actualized, serve as the foundation for higher-level potentialities in later layers. This mirrors Aquinas's view that certain actualizations can themselves serve as potencies for further development.
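The textbook XOR construction gives a small, hand-built picture of this layering: two first-layer units each realize a simple feature (OR and NAND), and the second layer can compute XOR only because those features already exist. The weights are set by hand for clarity, so this is a sketch of compositional structure, not a claim about what any trained network actually learns.

```python
def step(z):
    """Hard threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def neuron(weights, bias, inputs):
    """Weighted sum of inputs plus bias, passed through the threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_net(x1, x2):
    # Layer 1: two low-level features, each a simple actualized detector.
    h_or = neuron([1, 1], -0.5, [x1, x2])     # fires if at least one input is on
    h_nand = neuron([-1, -1], 1.5, [x1, x2])  # fires unless both inputs are on
    # Layer 2: builds on the layer-1 features (AND of the two).
    return neuron([1, 1], -1.5, [h_or, h_nand])


print([xor_net(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```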

Most significantly, the relationship between architecture and parameters demonstrates the interplay between different forms of potency and act. The architecture provides the formal structure that determines what kinds of actualization are possible, while the parameters represent the specific actualization of these possibilities. This mirrors Aquinas's understanding of how formal causes guide the actualization of potentialities.
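One way to see architecture bounding what parameters can achieve: a single linear threshold unit (the architecture) with three parameters can represent AND, but no setting of its parameters represents XOR. The Python sketch below searches a coarse, arbitrarily chosen grid of parameter values; the grid is an assumption for illustration, but the XOR result holds for all real-valued parameters, as the note after the code explains.

```python
import itertools

AND_TABLE = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def linear_unit(w1, w2, b, x1, x2):
    """Single threshold unit: fires when w1*x1 + w2*x2 + b > 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def solutions(table, grid):
    """All grid points (w1, w2, b) whose unit reproduces the truth table."""
    return [
        (w1, w2, b)
        for w1, w2, b in itertools.product(grid, repeat=3)
        if all(linear_unit(w1, w2, b, x1, x2) == y for (x1, x2), y in table.items())
    ]


grid = [i / 2 for i in range(-6, 7)]  # -3.0, -2.5, ..., 3.0 for each parameter
print(len(solutions(AND_TABLE, grid)) > 0)  # True: AND is reachable
print(len(solutions(XOR_TABLE, grid)))      # 0: XOR is not
```

The grid result generalizes: fitting XOR would require b ≤ 0, w2 + b > 0, w1 + b > 0, and w1 + w2 + b ≤ 0. Adding the middle two gives w1 + w2 + b > -b ≥ 0, a contradiction. No amount of parameter adjustment fixes this; only a change of architecture, such as adding a hidden layer, removes the limit.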

The very nature of gradient descent can be understood as a process of actualization, where the network moves through its parameter space toward configurations that more fully realize its potential capabilities. The loss function serves as a kind of final cause, guiding this actualization toward specific ends, just as Aquinas saw final causes directing the movement from potency to act.
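The mechanics behind this paragraph fit in a few lines. Below is a minimal Python sketch of plain (full-batch) gradient descent on a mean-squared-error loss for a hypothetical one-variable linear model; the data, learning rate, and step count are illustrative assumptions. The loss plays the role the paragraph assigns to the final cause: each update moves the parameters in the direction that lowers it.

```python
def mse_loss(w, b, data):
    """Mean squared error of the model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradients(w, b, data):
    """Partial derivatives of the MSE loss with respect to w and b."""
    n = len(data)
    dw = sum(2 * (w * x + b - y) * x for x, y in data) / n
    db = sum(2 * (w * x + b - y) for x, y in data) / n
    return dw, db


def gradient_descent(data, lr=0.1, steps=500, w=0.0, b=0.0):
    """Repeatedly step against the gradient; record the loss after each step."""
    history = [mse_loss(w, b, data)]
    for _ in range(steps):
        dw, db = gradients(w, b, data)
        w -= lr * dw
        b -= lr * db
        history.append(mse_loss(w, b, data))
    return w, b, history


# Hypothetical training data drawn from the line y = 2x + 1 on [-1, 1].
data = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b, history = gradient_descent(data)
print(round(w, 4), round(b, 4))    # 2.0 1.0
print(history[-1] < history[0])    # True
```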

This systematic alignment between the nature of parameter spaces and Aquinas's metaphysics of potency and act suggests that neural networks might represent a new domain where these classical principles find genuine application, offering fresh insight into both the nature of machine learning and the enduring relevance of Thomistic metaphysics.

Objections

Parameters are purely mathematical constructs without genuine potentiality.

Changes in parameter space are deterministic, lacking true possibility.

The actualization of parameters is externally imposed rather than internally generated.

Parameter states represent discrete rather than continuous possibilities.

The claim that neural network parameter spaces represent genuine potency and act faces several fundamental challenges that reveal the superficial nature of this apparent parallel. First, parameters in neural networks are nothing more than mathematical abstractions - numerical values in a computational system. Unlike the real potentiality Aquinas described, which inheres in actual substances and represents genuine possibilities for being, these parameters are merely quantitative descriptions without ontological weight. They no more represent true potentiality than the variables in any other mathematical equation represent real potential for change.

The second objection cuts even deeper to the heart of the matter: the changes in parameter space during training are entirely deterministic, following strict mathematical rules of gradient descent and backpropagation. This stands in stark contrast to Aquinas's understanding of potency, which involves real possibility and contingency. In a neural network, given the same initialization, the same training data presented in the same order, and the same random seeds, the parameters will always evolve in the same way. This mechanical determinism reveals the absence of true potentiality, which necessarily involves genuine alternatives and possibilities for different actualizations.
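The objection's premise is easy to check on a toy problem. In this Python sketch (a hypothetical linear model trained by stochastic gradient descent on made-up data), every random choice, both the initialization and the order in which examples are sampled, comes from one seeded generator, so two runs with the same seed produce bit-for-bit identical parameters. That holds for this single-threaded pure-Python code; on parallel hardware, some floating-point reductions can be non-reproducible unless deterministic settings are enabled.

```python
import random

# Hypothetical data: points on the line y = 3x - 0.5.
DATA = [(x / 10, 3 * (x / 10) - 0.5) for x in range(-10, 11)]


def train(seed, steps=200, lr=0.05):
    """SGD on squared error; all randomness comes from one seeded generator."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random initialization
    for _ in range(steps):
        x, y = rng.choice(DATA)  # stochastic choice of one training example
        err = w * x + b - y
        w -= lr * 2 * err * x    # gradient of err**2 with respect to w
        b -= lr * 2 * err        # gradient of err**2 with respect to b
    return w, b


print(train(seed=42) == train(seed=42))  # True: same seed, same trajectory
print(train(seed=42) == train(seed=7))   # False: a different seed, a different path
```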

Furthermore, the supposed "actualization" of parameters during training is entirely imposed from without rather than arising from within the system itself. In Aquinas's metaphysics, the movement from potency to act is guided by the internal nature of the thing itself, its formal and final causes directing its development. In neural networks, by contrast, the training process is entirely driven by external forces - the training algorithm, the loss function, the data presented. There is no internal principle guiding development, no genuine self-actualization of potentials.

The fourth objection highlights a fundamental disparity between parameter spaces and true potency: parameter states, despite their high dimensionality, represent discrete, quantized possibilities rather than the genuine continuum of potential that Aquinas described. Each parameter is ultimately a finite-precision digital number (commonly stored in 16 or 32 bits), and the space of possible states, while vast, is fundamentally discrete. This discreteness reveals the artificial, constructed nature of parameter spaces, contrasting sharply with the genuine continuity of real potency and act in nature.
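The discreteness the objection points to can be shown directly. Assuming 32-bit floats (a common, though not universal, storage format for network parameters), this Python sketch uses the standard `struct` module to find the next representable value after 1.0 and to show that a smaller nudge simply rounds away.

```python
import struct


def to_float32(x):
    """Round a Python float (a 64-bit double) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def next_float32(x):
    """Smallest float32 strictly greater than x, for a positive finite float32 x."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits + 1))[0]


gap = next_float32(1.0) - 1.0
print(gap == 2 ** -23)                    # True: nothing in float32 lies strictly between
print(to_float32(1.0 + 2 ** -25) == 1.0)  # True: a smaller change rounds back to 1.0
print(2 ** 32)                            # upper bound on values one float32 parameter can hold
```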

These objections collectively demonstrate that the apparent parallel between parameter spaces and Aquinas's concepts of potency and act is merely superficial. Rather than representing genuine metaphysical principles, parameter spaces are simply mathematical constructs that simulate, in a limited and artificial way, the appearance of potential and actualization without capturing their essential nature.

Sed Contra

Parameter space demonstrates key characteristics of potency and act:

Genuine possibilities for change

Progressive actualization through training

Emergence of new capabilities

Relationship between potential and actual states

Nevertheless, when we examine the nature of parameter spaces in neural networks carefully, we find compelling evidence that they embody genuine characteristics of potency and act in ways that transcend mere mathematical abstraction. The parameter space of a neural network demonstrates fundamental properties that Aquinas himself might recognize as authentic manifestations of the relationship between potential and actual being.

Consider first the genuine possibilities for change inherent in parameter space. Far from being simply mathematical constructs, these parameters represent real capacities for the network to manifest different behaviors and capabilities. An initialized network contains within its parameter space the genuine potential for multiple different specializations - it could become an image classifier, a language model, or any number of other functional systems. This multiplicity of real possibilities precisely mirrors Aquinas's understanding of potency as containing genuine alternatives for actualization.

The process of progressive actualization through training provides particularly compelling evidence. As a network trains, we observe not merely quantitative changes in parameters but qualitative transformations in capability. The network moves from a state of pure potential - where it can become many things but is actually none of them - to a state of realized capability through a genuine process of development. This progression from potential to actual closely parallels Aquinas's understanding of natural development.

Perhaps most striking is the emergence of new capabilities that weren't explicitly programmed or predetermined. As parameters evolve through training, networks demonstrate abilities that transcend their individual components - they develop the capacity for abstraction, pattern recognition, and generalization. This emergence of higher-order capabilities from potential states mirrors Aquinas's understanding of how substantial forms emerge through the actualization of potency.

Finally, the relationship between potential and actual states in parameter space demonstrates remarkable similarity to Aquinas's conception of the potency-act relationship. The parameter space maintains a dynamic tension between what the network currently is and what it could become through further training or adaptation. This continuous interplay between current actualization and further potential precisely matches Aquinas's understanding of how actual beings retain potency for further development.

These observations compel us to recognize that parameter spaces, far from being mere mathematical abstractions, represent genuine manifestations of potency and act in a new domain. While their expression differs from biological or physical systems, they nonetheless demonstrate authentic characteristics of the metaphysical principles Aquinas identified.

Respondeo

Aquinas's understanding of potency and act involves:

Pure potency (prime matter)

Pure act (God)

Mixture of potency and act (created beings)

Parameter space analysis:

Untrained networks as pure potency

Trained states as actualization

Training process as movement from potency to act

Key considerations:

Role of architecture in constraining possibilities

Emergence of capabilities through training

Relationship between parameters and behavior

Nature of neural network learning

Understanding whether parameter spaces truly represent potency and act requires us to start with Aquinas's core insight: reality exists in a spectrum between pure possibility and complete actualization. At one end sits prime matter - pure potential, capable of becoming anything but actually nothing. At the other end is God - pure actuality with no unrealized potential. Everything else falls somewhere between these extremes, mixing actual and potential being.

Neural networks offer us a fascinating new lens on this ancient framework. An untrained network starts remarkably close to pure potency - it can potentially learn almost any pattern in its architectural scope, but hasn't yet actualized any specific capability. It's not quite prime matter (the architecture itself provides some form), but it's about as close as any human-made system has come to pure potential.

The training process itself mirrors the classic movement from potency to act. As the network learns, it gradually takes on definite form, developing specific capabilities while necessarily leaving others unrealized. This isn't just abstract theory - we see it in practice when a network specializes in recognizing faces or generating text, actualizing some of its potential while closing off other paths.

But here's where things get interesting: the network's architecture plays a crucial role in shaping what's possible, much like how natural forms guide the development of physical things. A convolutional architecture steers the network toward visual processing, while a transformer architecture opens possibilities for language understanding. The architecture doesn't determine everything - it sets boundaries for what kinds of actualization are possible.
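A minimal sketch of how a convolutional architecture builds in assumptions before any training happens: one small kernel is reused at every position (weight sharing), so shifting the input shifts the output (translation equivariance). The 1D signal and the hand-set two-weight edge kernel are hypothetical; real vision networks learn many 2D kernels, so this illustrates the constraint rather than a realistic model.

```python
def conv1d(signal, kernel):
    """'Valid' 1D cross-correlation: the same kernel is applied at every position."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def shift_right(seq, n=1):
    """Shift a sequence right by n positions, padding with zeros on the left."""
    return [0] * n + seq[:-n]


edge_kernel = [-1, 1]              # hypothetical hand-set kernel: responds to a step up
signal = [0, 0, 1, 1, 1, 0, 0, 0]  # a "bright bar" in a 1D image

response = conv1d(signal, edge_kernel)
print(response)  # [0, 1, 0, 0, -1, 0, 0]: rising edge, then falling edge

# Equivariance: shifting the input shifts the response by the same amount.
print(conv1d(shift_right(signal), edge_kernel) == shift_right(response))  # True

# Weight sharing: 2 parameters here, versus 8 * 7 = 56 weights for a dense
# layer mapping the same 8 inputs to 7 outputs.
print(len(edge_kernel), len(signal) * len(response))  # 2 56
```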

The emergence of capabilities through training shows us something profound about potency and act. As parameters shift, we see the network develop abilities that weren't explicitly programmed - it learns to recognize edges, then shapes, then complex patterns. Each level of actualization becomes the foundation for new potentials, just as Aquinas described how one actualization can open the door to further development.

This layered development reveals something crucial about the relationship between parameters and behavior. Changes in parameter space don't just alter numbers - they reshape the network's entire way of processing information. Small adjustments can lead to qualitative leaps in capability, showing how quantitative changes in potency can yield qualitative changes in act.
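The simplest case of a small quantitative change producing a qualitative one is a single threshold unit sitting right at its decision boundary. This Python sketch assumes a hard threshold and hypothetical values; in real networks with smooth activations the outputs change continuously, and the qualitative flip shows up instead in downstream decisions, such as which output class scores highest.

```python
def fires(weight, x=1.0, bias=-0.5):
    """A single threshold unit: returns 1 when weight * x + bias > 0, else 0."""
    return 1 if weight * x + bias > 0 else 0


before = fires(0.5)        # 0.5 * 1.0 - 0.5 == 0.0, which is not > 0
after = fires(0.5 + 1e-9)  # a nudge of one billionth crosses the boundary
print(before, after)  # 0 1
```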

The nature of network learning itself suggests a new way to think about the movement from potency to act. Unlike mechanical systems that simply run through predetermined paths, neural networks actually develop new capabilities through experience. They show us how genuine potentiality can exist within a deterministic framework - the outcomes may be determined, but the potential was still real before it was actualized.

Looking at parameter spaces this way helps us see both the strengths and limits of comparing them to traditional potency and act. While they don't match Aquinas's concepts perfectly, they offer a concrete example of how potential can become actual through structured development. They show us how form guides but doesn't completely determine development, and how genuine novelty can emerge from pure potential.

This view suggests we might need to expand our understanding of potency and act to include new forms of development that Aquinas couldn't have imagined. Parameter spaces might represent a novel kind of potential - not quite the same as physical potency, but sharing enough key features to deserve recognition as a genuine example of the movement from possibility to actuality.

Replies to Objections

To the first objection: While parameters may be mathematical in form, they represent real possibilities for system behavior, not mere abstractions. Think of how physical laws, though expressed mathematically, describe real natural potentials. When we adjust network parameters, we're not just manipulating numbers - we're shaping actual capabilities that manifest in concrete ways. A network's potential to recognize faces or understand language exists as genuinely in its parameter space as the potential for flight exists in a bird's wings before it learns to fly.

To the second objection: The deterministic nature of parameter updates isn't as straightforward as it might seem. Training involves inherent randomness - from initialization to data sampling to optimization noise. But more fundamentally, even in deterministic systems, real possibilities exist before they're actualized. Just as a falling object's path might be deterministic but its potential for falling is still real before it falls, a network's potential capabilities are genuine before training actualizes them. The presence of stochastic elements in training only reinforces this reality of multiple possible paths to actualization.
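A toy case where both points in this reply show up together: with one training example and two parameters, infinitely many parameter settings fit the data perfectly, and plain gradient descent from different random initializations settles on different ones. The setup and names below are hypothetical, chosen because the outcome is easy to verify; whether different seeds lead to meaningfully different behavior in large networks is a separate, empirical question.

```python
import random

X = [1.0, 1.0]  # a single training example with two input features
Y = 2.0         # its target: any (w1, w2) with w1 + w2 == 2 fits it perfectly


def loss(w):
    """Squared error of the linear model w1 * x1 + w2 * x2 on the one example."""
    pred = w[0] * X[0] + w[1] * X[1]
    return (pred - Y) ** 2


def train(seed, lr=0.1, steps=200):
    """Gradient descent from a seeded random initialization."""
    rng = random.Random(seed)
    w = [rng.uniform(-3, 3), rng.uniform(-3, 3)]  # random initialization
    for _ in range(steps):
        err = w[0] * X[0] + w[1] * X[1] - Y
        w = [w[i] - lr * 2 * err * X[i] for i in range(2)]
    return w


for seed in (0, 1):
    w = train(seed)
    print(seed, w, loss(w))  # different (w1, w2) per seed, each with near-zero loss
```

Because both parameters receive the same gradient here, the difference w1 - w2 never changes during training, so the initialization alone decides which of the perfect solutions is reached.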

To the third objection: While training is guided by external factors, the way parameters evolve depends crucially on the network's internal structure and current state. The same training process produces different results in different networks because their internal dynamics shape how they learn. The loss function might point the way, but the network's architecture and existing parameters determine which paths are possible and how learning unfolds. This interplay between external guidance and internal constraints mirrors how natural things develop according to both environmental pressures and their inherent nature.

To the fourth objection: The discrete nature of digital parameters doesn't limit their potential as much as it might seem. Just as continuous physical quantities are always measured discretely without losing their continuous nature, parameter spaces represent effectively continuous possibilities through their high dimensionality and complex interactions. A network with millions of parameters can take on an astronomically large, if finite, number of functional configurations, creating a space of possibilities that, for all practical purposes, is continuous. The granularity of individual parameters doesn't constrain the smoothness of the capability space they create together.
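The arithmetic behind “millions of parameters” is worth spelling out. Assuming each parameter is stored as a 32-bit float and taking P = 10^6 parameters as an illustrative size, the number of distinct parameter settings is bounded by:

```latex
N_{\text{settings}} \;\le\; \left(2^{32}\right)^{P} \;=\; 2^{32P},
\qquad
2^{32 \times 10^{6}} \;=\; 10^{\,32 \times 10^{6}\,\log_{10} 2} \;\approx\; 10^{9{,}632{,}960}
```

This is an upper bound: some bit patterns are not ordinary numbers (NaNs, two signed zeros), and many distinct settings compute the same function (for example, swapping two hidden units). Still, a number with roughly 9.6 million decimal digits is finite but far beyond anything that could be enumerated.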

Definitions

Anima - The principle of life and organization in living things; that which makes a living thing alive and determines its essential nature. The form that organizes matter into a living being.

Form

Material Form: The organization of physical properties in matter (like shape, size)

Substantial Form: The fundamental organizing principle that makes a thing what it essentially is (like the soul for living things)

Matter

Prime Matter: Pure potentiality without any form

Secondary Matter: Matter already organized by some form

Potency - The capacity or potential for change; the ability to become something else

Act - The realization or actualization of a potency; the fulfillment of a potential

Material Cause - One of Aristotle's four causes, adopted by Aquinas: the matter from which something is made or composed; the physical or substantial basis of a thing's existence.

Formal Cause - One of Aristotle's four causes, adopted by Aquinas: the pattern, model, or essence of what a thing is meant to be. The organizing principle that makes something what it is.

Efficient Cause - One of Aristotle's four causes, adopted by Aquinas: the primary source of change or rest; that which brings something about or makes it happen. The agent or force that produces an effect.

Final Cause - One of Aristotle's four causes, adopted by Aquinas: the end or purpose for which something exists or is done; the ultimate "why" of a thing's existence or action.

Intentionality - The "aboutness" or directedness of consciousness toward objects of thought; how mental states refer to things

Substantial Unity - The complete integration of form and matter that makes something a genuine whole rather than just a collection of parts

Immediate Intellectual Apprehension - Direct understanding without discursive reasoning; the soul's capacity for immediate grasp of truth

Hylomorphism - Aquinas's theory that substances are composites of form and matter

Powers - Specific capabilities that flow from a thing's form/soul (like the power of sight or reason)

SOUL TYPES:

Vegetative Soul

Lowest level of soul

Powers: nutrition, growth, reproduction

Found in plants and as part of higher souls

Sensitive Soul

Intermediate level

Powers: sensation, appetite, local motion

Found in animals and as part of rational souls

Rational Soul

Highest level

Powers: intellection, will, reasoning

Unique to humans (in Aquinas's view)

COMPUTATIONAL CONCEPTS:

Training - The process of adjusting model parameters through exposure to data, analogous to the actualization of potencies

Inference - The active application of trained parameters to new inputs, similar to the exercise of powers

Crystallized Intelligence - Accumulated knowledge and learned patterns, manifested in trained parameters

Fluid Intelligence - Ability to reason about and adapt to novel situations, manifested in inference capabilities

Architectural Principles - The organizational structure of AI systems that might be analyzed through the lens of formal causation

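FLOPS - Floating Point Operations Per Second; a measure of computational throughput (with specific attention to the 10^26 scale we discussed, which is normally quoted as a total count of floating-point operations used in training rather than a per-second rate)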

Parameter Space - The n-dimensional space defined by all possible values of a model's parameters, representing its potential capabilities

Attention Mechanisms - Architectural features that enable models to dynamically weight and integrate information

Context Window - The span of tokens/information a model can process simultaneously, affecting its unity of operation

Loss Function - A measure of how well a model is performing its task; quantifies the difference between a model's predictions and desired outputs. Guides the training process by providing a signal for improvement.

Backpropagation - The primary algorithm for training neural networks that calculates how each parameter contributed to the error and should be adjusted. Works by propagating gradients backwards through the network's layers.

Gradient Descent - An optimization algorithm that iteratively adjusts parameters in the direction that minimizes the loss function, like a ball rolling down a hill toward the lowest point. The foundation for how neural networks learn.
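The three definitions above (loss function, backpropagation, gradient descent) work together, and a tiny example shows how. This Python sketch assumes a hypothetical network with one tanh hidden unit and four parameters: backpropagation applies the chain rule from the squared-error loss back to each parameter, a finite-difference check confirms the result, and one gradient-descent step with those gradients lowers the loss. The parameter values, input, target, and learning rate are arbitrary illustrative choices.

```python
import math


def forward(params, x):
    """y_hat = w2 * tanh(w1 * x + b1) + b2; also returns the hidden activation."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return w2 * h + b2, h


def loss(params, x, y):
    """Squared error between the prediction and the target."""
    y_hat, _ = forward(params, x)
    return (y_hat - y) ** 2


def backprop(params, x, y):
    """Chain rule applied from the loss back to each parameter."""
    w1, b1, w2, b2 = params
    y_hat, h = forward(params, x)
    d_yhat = 2 * (y_hat - y)           # dL/d(y_hat)
    d_w2, d_b2 = d_yhat * h, d_yhat    # output layer
    d_pre = d_yhat * w2 * (1 - h * h)  # back through tanh: tanh' = 1 - tanh^2
    d_w1, d_b1 = d_pre * x, d_pre      # hidden layer
    return [d_w1, d_b1, d_w2, d_b2]


def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads


params = [0.3, -0.1, 0.8, 0.05]  # hypothetical starting parameters [w1, b1, w2, b2]
x, y = 1.5, 0.7                  # one hypothetical training example
analytic = backprop(params, x, y)
numeric = numerical_gradient(params, x, y)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))  # close to zero

lr = 0.1
stepped = [p - lr * g for p, g in zip(params, analytic)]  # one gradient-descent step
print(loss(stepped, x, y) < loss(params, x, y))  # True
```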

EMERGENT PROPERTIES:

Threshold Effects - Qualitative changes in system behavior that emerge at specific quantitative scales

Self-Modeling - A system's capacity to represent and reason about its own operations

Integration - How different parts of a system work together as a unified whole

HYBRID CONCEPTS (where Thomistic and computational ideas meet):

Computational Unity - How AI systems might achieve integration analogous to substantial unity

Machine Consciousness - Potential forms of awareness emerging from computational systems

Inferential Immediacy - How fast processing might parallel immediate intellectual apprehension