Merry Christmas! This is more of a visceral reaction to the announcement of o3. I mostly wrote it a few days ago but, in the pre-Christmas prep and haste, hadn’t had time to edit it or hit the publish button until now.

It’s a bit amusing and delightful to be publishing this on Christmas and using Douglas Adams’s famous story about the universe. But I’ve always found comfort in it. The idea that 42 is the answer to everything is a great laugh, except that Deep Thought delivers it with “infinite majesty and calm.” It has always reminded me of something. No matter how much dramatic intelligence is brought to bear, no matter how preposterous the ceremony or ritual, no matter how many complications, and even after seven and a half million years of waiting, the answer we desire is so often right in front of us and far simpler than we can imagine.

As simple as a newborn child, sleeping in a manger.

"O Deep Thought computer," he said, "the task we have designed you to perform is this. We want you to tell us...." he paused, "The Answer."

"The Answer?" said Deep Thought. "The Answer to what?"

"Life!" urged Fook.

"The Universe!" said Lunkwill.

"Everything!" they said in chorus.

Deep Thought paused for a moment's reflection.

"Tricky," he said finally.

"But can you do it?"

Again, a significant pause.

"Yes," said Deep Thought, "I can do it."

"There is an answer?" said Fook with breathless excitement.

"Yes," said Deep Thought. "Life, the Universe, and Everything. There is an answer. But, I'll have to think about it."

...Fook glanced impatiently at his watch.

“How long?” he said.

“Seven and a half million years,” said Deep Thought.

Lunkwill and Fook blinked at each other.

“Seven and a half million years...!” they cried in chorus.

“Yes,” declaimed Deep Thought, “I said I’d have to think about it, didn’t I?”

— Douglas Adams, Hitchhiker’s Guide To The Galaxy

Just a few days ago I was lauding Google for releasing new stuff and upstaging poor old OpenAI.

I really should've known better. On the last day of their "12 Days of OpenAI" event, they announced o3 (skipping o2 in a self-aware homage to their horrible naming), and all indications are that o3 is quite a game changer.

I won't belabor the capabilities because everyone else is talking and hypothesizing about those. Instead, o3 has me thinking about vulnerable worlds.

Five years ago, Nick Bostrom proposed a thought experiment called the Vulnerable World Hypothesis as a way to think about the impact of technology. He asked how the world would be different if nuclear weapons were very easy to make rather than very hard. What if something with such a huge (negative) impact could be built in anyone's backyard shed? What would change? Bostrom's main argument is that the policy implications are dramatic and that we would need preventive global governance to handle the problem, but that's not the part that sticks with me.

Here's something we don't think about much with software. It requires gargantuan effort and knowledge to produce, just like a nuclear weapon. But once software is built, the marginal cost to distribute it is effectively zero. Every software company relies on this. Microsoft, Adobe, or whoever else spends millions of man-hours and billions of dollars to produce Office 365 or Photoshop. Then millions of people want it and use it, and distributing it to those millions costs almost nothing. Moore's law made sure it could run locally or at low cost, so for a few hundred bucks a year per person the companies make their money back and then some, and everyone is happy.

In one dimension, software is just like a nuclear weapon: it demands high expertise and skill. But in another dimension, software is exactly the opposite: zero-cost distribution.
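To put those two dimensions in rough terms, here is a simple average-cost model. It is an illustrative assumption layered on the argument above, not something from Bostrom: $F$ is the up-front cost to build the product, $c$ is the marginal cost of each additional copy or use, and $N$ is the number of users.

$$
\bar{C}(N) = \frac{F}{N} + c,
\qquad
\lim_{N \to \infty} \bar{C}(N) = c
$$

For traditional software, $c \approx 0$, so the cost per user keeps falling toward zero as adoption grows. When a technology's marginal cost $c$ is large, no amount of adoption pushes the per-use cost below that floor.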

LLMs so far function mostly the same way. OpenAI gathered some of the smartest people on the planet and spent large piles of dollars to buy GPUs and train GPT-4. Then they released it to the world and made their money back through distribution to millions of people. ChatGPT was, as we've heard, the fastest product ever to reach a hundred million users.

But LLMs require a lot more computing power at run time, for inference. You can't run the frontier models locally; your machine doesn't have enough computing power. They have to run on cloud GPU hardware, and that's expensive enough that you get charged for it. According to OpenAI's API pricing page, the best GPT-4 model costs $10 per million tokens, and o1, their best reasoning model, costs $15 per million tokens.

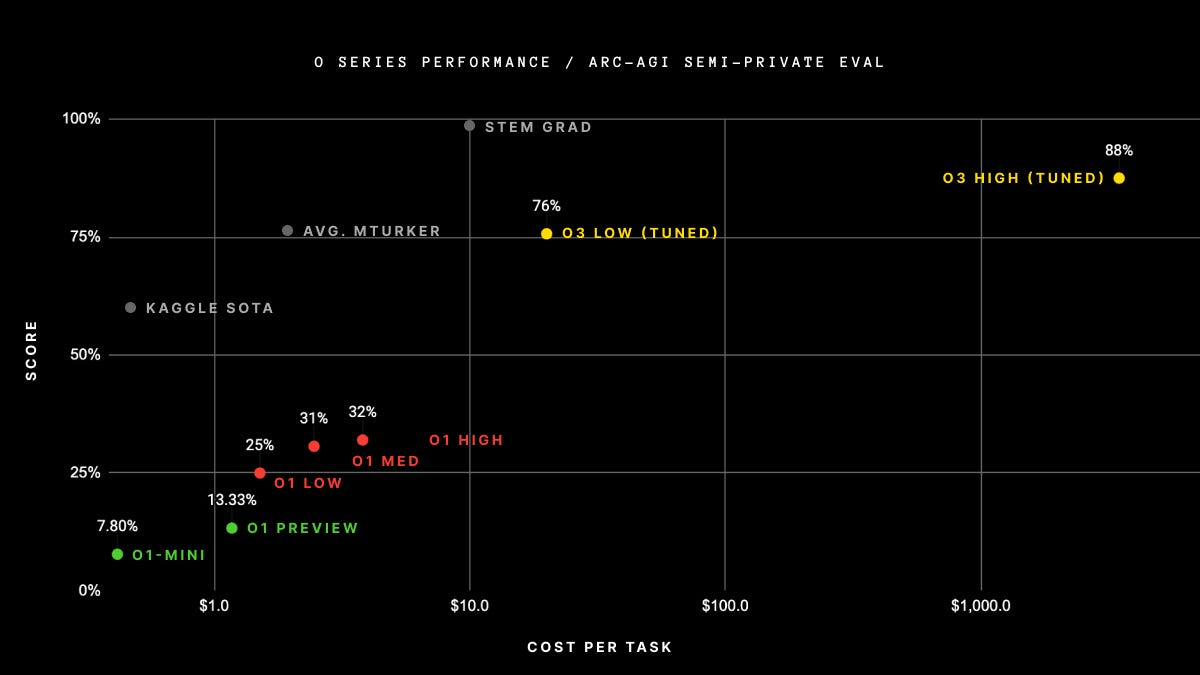

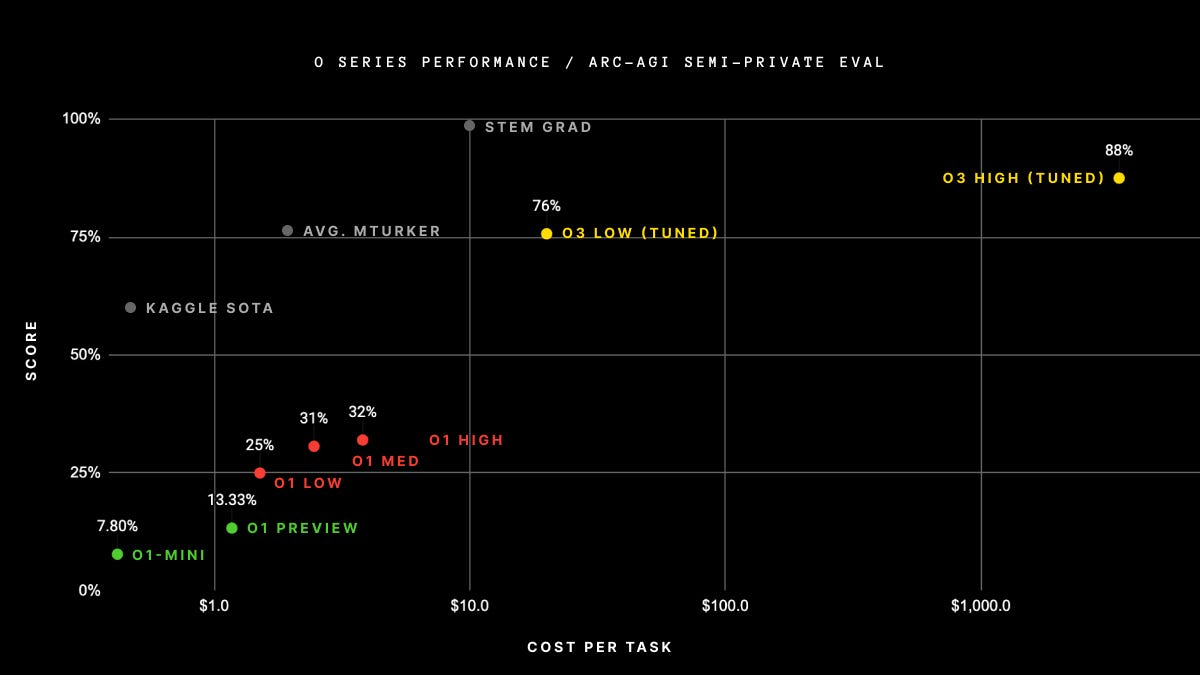

What's got me noodling is that o3 clearly demonstrates the power of test-time compute: chain of thought is going to be a big deal. But let's look at that chart again, and this time pay attention to the x-axis.

o3 costs over $1,000 per task. That is A LOT of compute. It's not world-changing money, at least for one task, but it's expensive enough to give plenty of people pause. And if you have 1,000 or 10,000 tasks to accomplish, what then? Problem definition and prompt engineering suddenly become much more important to get right from the beginning. We can imagine a world where the upfront investment for all sorts of new ideas centers on prompt creation and optimizing test-time compute.
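A quick back-of-the-envelope sketch of that scaling, using only the rough figures quoted in this post as assumptions (about $1,000 per o3 task, and $15 per million tokens for o1). The constant and function names are hypothetical, and nothing here calls a real API or reflects a real price list:

```python
# Back-of-the-envelope inference costs. The prices are the rough figures
# quoted in this post, used as illustrative assumptions, not a price list.
O3_COST_PER_TASK_USD = 1_000.0          # hypothetical: "over $1,000 per task" for o3
O1_PRICE_PER_MILLION_TOKENS_USD = 15.0  # hypothetical: o1 price quoted above


def batch_cost(num_tasks: int, cost_per_task: float = O3_COST_PER_TASK_USD) -> float:
    """Total spend when every task costs the same fixed amount."""
    return num_tasks * cost_per_task


def tokens_for_budget(budget_usd: float,
                      price_per_million: float = O1_PRICE_PER_MILLION_TOKENS_USD) -> float:
    """How many tokens a budget buys at a flat per-million-token price."""
    return budget_usd / price_per_million * 1_000_000


if __name__ == "__main__":
    # Scale the per-task cost up to the task counts mentioned in the text.
    for n in (1, 1_000, 10_000):
        print(f"{n:>6,} tasks -> ${batch_cost(n):,.0f}")
    # One o3 task's worth of money, spent on o1 tokens instead.
    print(f"$1,000 of o1 tokens -> about {tokens_for_budget(1_000):,.0f} tokens")
```

At those assumed prices, 1,000 tasks come to about $1,000,000 and 10,000 tasks to about $10,000,000, while the $1,000 spent on a single o3 task would buy roughly 66.7 million o1 tokens.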

The Vulnerable World Hypothesis gives us a framework for thinking about the relationship between the expertise and investment a technology requires and the amount of impact it can have on the world. Since the beginning of software, the cost of distributing the product has been functionally zero. And hardware advances across the board have stayed ahead of complexity, so the cost of actually running software is not much more than the cost of infrastructure and electricity.

If o3 is a sign of where we're going, that's going to get upended. The workloads LLMs handle today, with near-real-time responses, are far lighter than what o3 is doing. They're useful tools, but they aren't AGI. But lots of people are freaking out about o3. It is solving extraordinarily hard math problems. It is carrying out decision-making processes and breaking large tasks down into discrete steps. It has ridiculously high Elo ratings on competitive coding exercises. Extrapolate that out, and LLMs are going to do a whole lot more, a whole lot better, than humans can.

But it'll cost you.

A lot of software today is SaaS: Software as a Service. Chain-of-thought LLMs are going to be IaaS: Intelligence as a Service. Intelligence itself will become a utility. But unlike traditional software, it might not democratize the same way. If IaaS goes the way of o3, we just might be able to compute the answer to life, the universe, and everything, but it'll cost you.

On the other hand, there are stories like this.

Status and salary are directly correlated with the (perceived) level of intelligence a job requires. This is why engineering and other white-collar jobs pay more. If ramping up intelligence does NOT require this hyper-expensive scale-up of chain-of-thought compute, and token costs keep coming down, the status and salary of those intelligence-heavy jobs are going to drop precipitously. So far, the more common uses of AI have followed technology's more typical deflationary pattern.

Which way does this thing go? The main point of this screed (if there is one, aside from general amazement at o3) is that we are still so early that we're only just barely able to think about the right ways to frame it. Bostrom's Vulnerable World Hypothesis is at least a useful way to think about the dimensions that matter here. Humans are used to intelligence being a scarce commodity, one that is worth paying for and scaling up when available. Machine intelligence might get wildly expensive yet have incredibly high upper limits. Or it might become something cheap and universal that anyone can access.

And nobody knows! Maybe it’s somehow both! These futures are wildly different from our current world. Perhaps the weirdest part, and more than a few people who are paying attention have said the same, is that I hardly even know how to talk to other people about this. o3 is a harbinger of changes to intelligence, but the community thinking about AI is still incredibly small, and most people neither know nor care about it. The world is going to change a lot in the next 10 or 20 years, and it will catch us all by surprise.

I look forward to an answer, and it had better be 42.

"Good Morning," said Deep Thought at last.

"Er... good morning, O Deep Thought," said Loonquawl nervously, "do you have... er, that is..."

"An Answer for you?" interrupted Deep Thought majestically. "Yes, I have."

The two men shivered with expectancy. Their waiting had not been in vain.

"There really is one?" breathed Phouchg.

"There really is one," confirmed Deep Thought.

"To Everything? To the great Question of Life, the Universe and everything?"

"Yes."

Both of the men had been trained for this moment, their lives had been a preparation for it, they had been selected at birth as those who would witness the answer, but even so they found themselves gasping and squirming like excited children.

"And you're ready to give it to us?" urged Loonquawl.

"I am."

"Now?"

"Now," said Deep Thought.

They both licked their dry lips.

"Though I don't think," added Deep Thought, "that you're going to like it."

"Doesn't matter!" said Phouchg. "We must know it! Now!"

"Now?" inquired Deep Thought.

"Yes! Now..."

"All right," said the computer, and settled into silence again. The two men fidgeted. The tension was unbearable.

"You're really not going to like it," observed Deep Thought.

"Tell us!"

"All right," said Deep Thought. "The Answer to the Great Question..."

"Yes..!"

"Of Life, the Universe and Everything..." said Deep Thought.

"Yes...!"

"Is..." said Deep Thought, and paused.

"Yes...!"

"Is..."

"Yes...!!!...?"

"Forty-two," said Deep Thought, with infinite majesty and calm.