Hands and Faces

Posted at risk of upsetting my incredible wife. Hope you enjoy, it may be my last post ever.

When DALL-E first came out, all those eons ago in 2021, I remember my amazement. This was the first image that really got me.. the prompt: “a bowl of soup that looks like a monster knitted out of wool”

And since then, we’ve gotten an ongoing stream of delightful and fanciful images that are only held back by imagination. Midjourney and Stable Diffusion are filled with sci-fi robots (duh), great wordplay, and granite Roman busts in living color. What once would take an artist weeks is now accomplished with a small set of prompts. We’ve got 50s comic book versions of Game of Thrones and Bollywood India versions of Harry Potter. And pretty much any other version of your favorite characters you could ask for.

Most of the AI art we see is far enough away from the real world that it appeals to our imagination. Instead of critically recognizing something from our daily life, we can engage our fantasy drive and accept the new vision presented to us. Even the everyday documentarian photo realistic results are incredible. There are plenty of threads like this one from CNet comparing real and fake photos.

One of the surprising things that we've learned from AI image generation is exactly what matters to make a good image, especially of people. It’s easier to see real images when the subjects are anonymous. It’s harder when you know the subjects. So we’re going to look at some personal examples to see how things go.

I've been playing with Photoai.com - from the prolific @levelsio - and the results are both amazing and demonstrate this perfectly. Photoai.com is really slick - you upload a set of photos of a person (your model) and this is used as training data (few-shot) to generate an (AI) model of your (person) model. For example, here's a headshot of my wife that she used for her business.

That is an awesome headshot and not just because I’m biased and think my wife is gorgeous! It’s also a really good representation of her.

Now let’s look at a professional headshot of me.

Or this one, which I’m currently using as an avatar until I can improve it.. more on that in a second.

If you don’t know either of us, these two shots will look just pretty good. But if you know my wife and I well, you’ll see that the ones of me are.. not quite right. Since we’re building these with our own trained model, the quality difference here stems from the same place as from any other modern AI model: data. In my family, I’m the photographer. So I have a large number of high quality photos of my wife and kids and apparently many less of myself! I’m going to retrain the model on some newer pictures of me (side note: I had to wait a month because of a ridiculous case of impetigo) and hopefully get some better output.

But even with my wife’s much better model some of the output struggles. Everything looks amazing and photorealistic except for tiny details.

What’s off in this AI photo? The skin appears retouched, resembling the smooth finish achieved by skilled studio photographers using techniques like frequency separation. The collarbone is a little weird. The hair seems right. The eyes are right. Nose, right. Chin, right. The teeth are definitely a little different. But I find it really hard to point out exactly what separates the two.. and yet, I don’t know who that is, but it’s definitely not her.

And then there’s our hands. Check out all these great images. The faces are very close, as before, but in these images our hands are seriously jacked up too.

Image generation models have had a helluva time getting hands right. Pieter Levels has talked a lot about how much work he’s put into making hands better in PhotoAI.

Hands have been one of the hardest subjects for artists to get right for centuries. Da Vinci spent an inordinate amount of time on them. They are a close second to faces in what we look at to understand “accuracy”, but hands aren’t an identifying feature of individuals. The human brain is highly tuned on facial anatomy. There seems to be no equivalent for hands. And yet they will ruin an image just as much.

Why?

I think there are a couple of reasons. First, hands are nearly always a part of the subject. If the background of an image is all jacked up you may notice eventually, but it won’t draw the same level of immediate critique as the primary subject. This seems obvious, but it’s worth saying. For example in this remarkably jacked image of me - one I aspire to by the way - the background is physically wrong. There are a gazillion benches that make no physical sense, but they’re out of focus and in the image bokeh and so our brain goes “good enough” and moves on.

Second, hands are far more dynamic than we think. A common vanishing point in a background may vary a lot in terms of what objects are vanishing, but the attributes of the behavior is consistent. Hands articulate a lot. They have 27 degrees of freedom and there’s likely more diversity in hand placement in training data.

Look past the floating dog head on my shoulder in this image and you’ll see a background guy with a wildly contorted arm. Maybe he’s trying to scratch his back but I sure can’t do that. It doesn’t ruin the shot because it’s background, but it makes it a little weird.

Last is.. vibes? I want to go back to faces for a second and zoom in. The really great headshots of my wife still have some very odd small details. Teeth and ears are consistently weird.

That earring hangs completely off the ear, attached by a stroke that I could see being a part of a good drawing. And the teeth are completely wrong on close-up, but in a smaller, more complete image these shapes seem to produce the same pixelated patterns that are pleasing to the human eye.

There’s been some studies on the specific muscles and facial characteristics that present emotion or recognition. AI gets images right when it nails these and wrong when it doesn’t. And if there are other small differences outside of those details - even if it’s on the subject and on the face or hands - they just don’t matter as much.

One common thesis about AI models is that they are building a model of the real world. To properly predict the next token, Ilya and others will say, is the same as understanding the entire world and being able to manipulate it.

Either image generation models are different or not as evolved as LLMs or specific parts of our world - like our hands - are more diverse and complex than we realized.

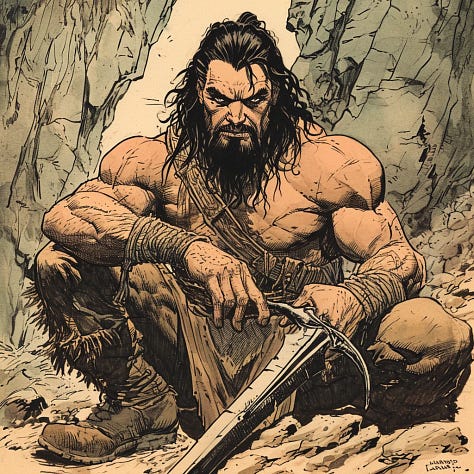

The thing is that the vibes carry AI results a really long way. This is one of my favorite threads on /r/midjourney and here’s one of the stylized images:

That’s an awesome image of Dadhood. It evokes emotion and happiness and - when combined with the thread title “I miss you Dad” - bittersweet memory. The fact that Dad seems to have six fingers on both hands and a hoodie that blends with his son’s sweatshirt takes away none of that.

So vibes win. But vibes do not a world model make.

Some of the early takes on AI video generation have some interesting results. Next frame prediction clearly goes haywire here too, like this guy’s horrifying vacation videos.

I’m more willing to buy the argument that token prediction is world understanding in a constrained vocabulary like English with on the order of 100,000 words or so. I feel much more skeptical about image and video.

One action that would make me much less skeptical is well-defined editing that lets a user manipulate the model. I think of this as a raytracing problem. We’ll cover that next.